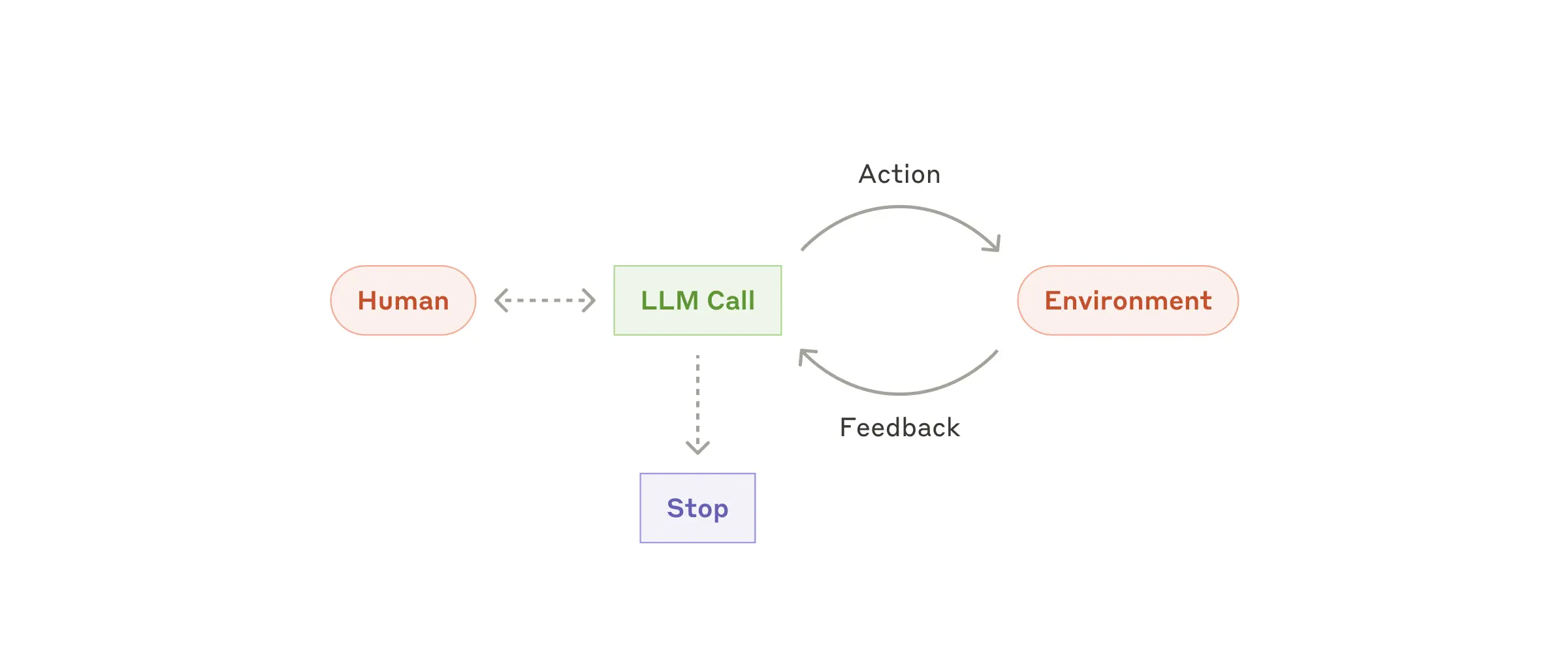

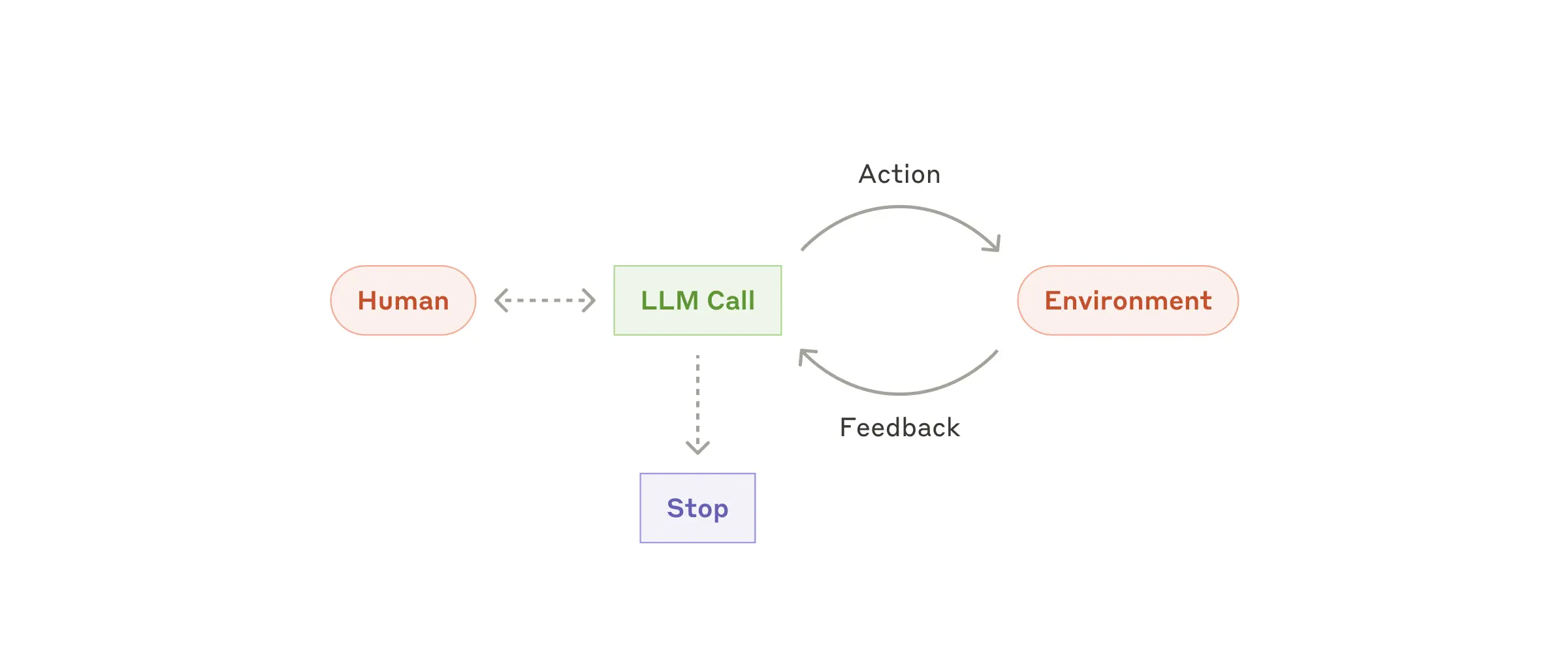

Overview of an AI agent, figure from Building Effective Agents

This reading note is a curated summary of papers and blog posts on AI agents. Much of the text is quoted directly or adapted from the original sources. The goal is to consolidate key resources on AI agents for easy reference and study. Updates will be added as new developments arise.

Note: Touch-ups by ChatGPT.

Qinyuan Wu, last updated: 2025.01.15

What’s the difference between AI agents and AI models?


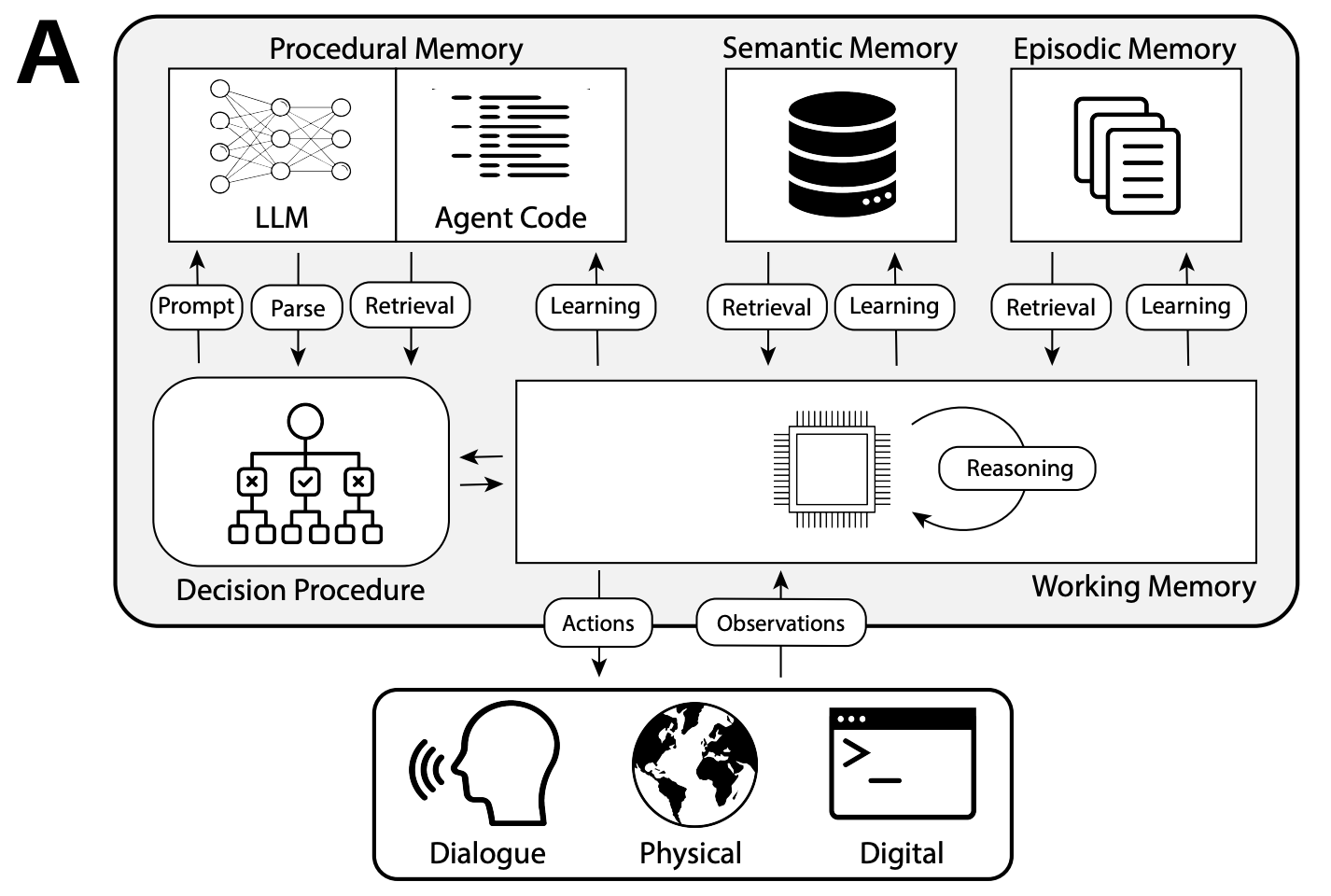

Agents achieve their goals using cognitive architectures that process information iteratively, make informed decisions, and refine actions based on previous outputs.

Chain of Thought (CoT): A standard prompting technique instructing models to “think step by step,” breaking complex tasks into simpler steps. CoT enhances performance by utilizing more test-time computation and making the model’s reasoning process interpretable.

Tree of Thoughts (ToT): Extends CoT by exploring multiple reasoning possibilities at each step. A problem is decomposed into thought steps, and several candidate thoughts are generated per step, forming a tree. The tree can be searched with BFS (breadth-first search) or DFS (depth-first search), with each state evaluated by a classifier or by majority vote.
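The BFS variant of ToT can be sketched on a toy problem. This is a minimal illustration, not the method from the original paper: `propose_thoughts` and `evaluate` are hypothetical stand-ins for the LLM calls that would generate and score thoughts, and the task (pick three numbers that sum to a target) is invented for the example.

```python
from typing import List, Tuple

State = Tuple[int, ...]  # a partial solution: the numbers chosen so far


def propose_thoughts(state: State) -> List[State]:
    """Hypothetical stand-in for an LLM proposing several next thoughts."""
    return [state + (n,) for n in (1, 3, 5)]


def evaluate(state: State, target: int) -> float:
    """Hypothetical stand-in for a classifier or vote; higher is better."""
    return -abs(target - sum(state))


def tot_bfs(target: int, depth: int, beam_width: int) -> State:
    """Breadth-first search over thoughts, keeping the best states per level."""
    frontier: List[State] = [()]
    for _ in range(depth):
        # Expand every state in the frontier into its candidate next thoughts.
        candidates = [nxt for s in frontier for nxt in propose_thoughts(s)]
        # Score all candidates and keep only the top `beam_width` of them.
        candidates.sort(key=lambda s: evaluate(s, target), reverse=True)
        frontier = candidates[:beam_width]
    return frontier[0]


best = tot_bfs(target=9, depth=3, beam_width=2)
print(best, sum(best))
```

Keeping more than one state per level is what separates this from plain CoT, which would commit to a single chain.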

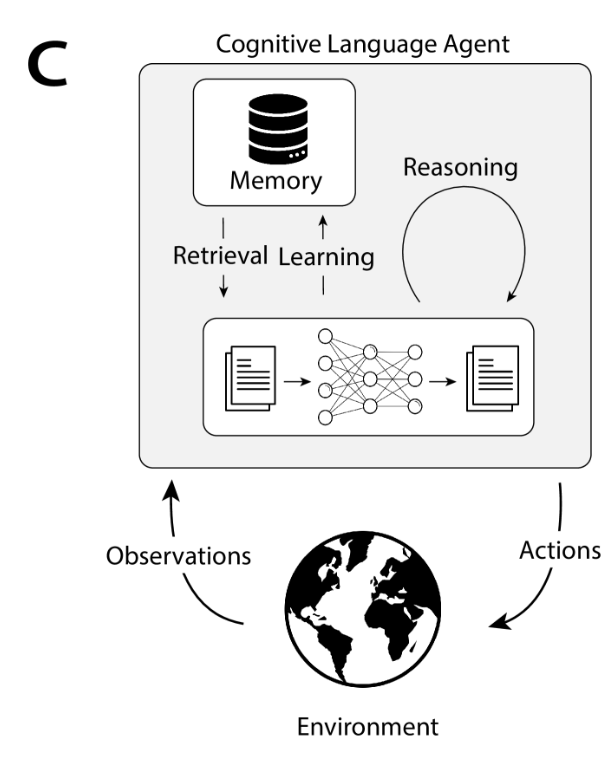

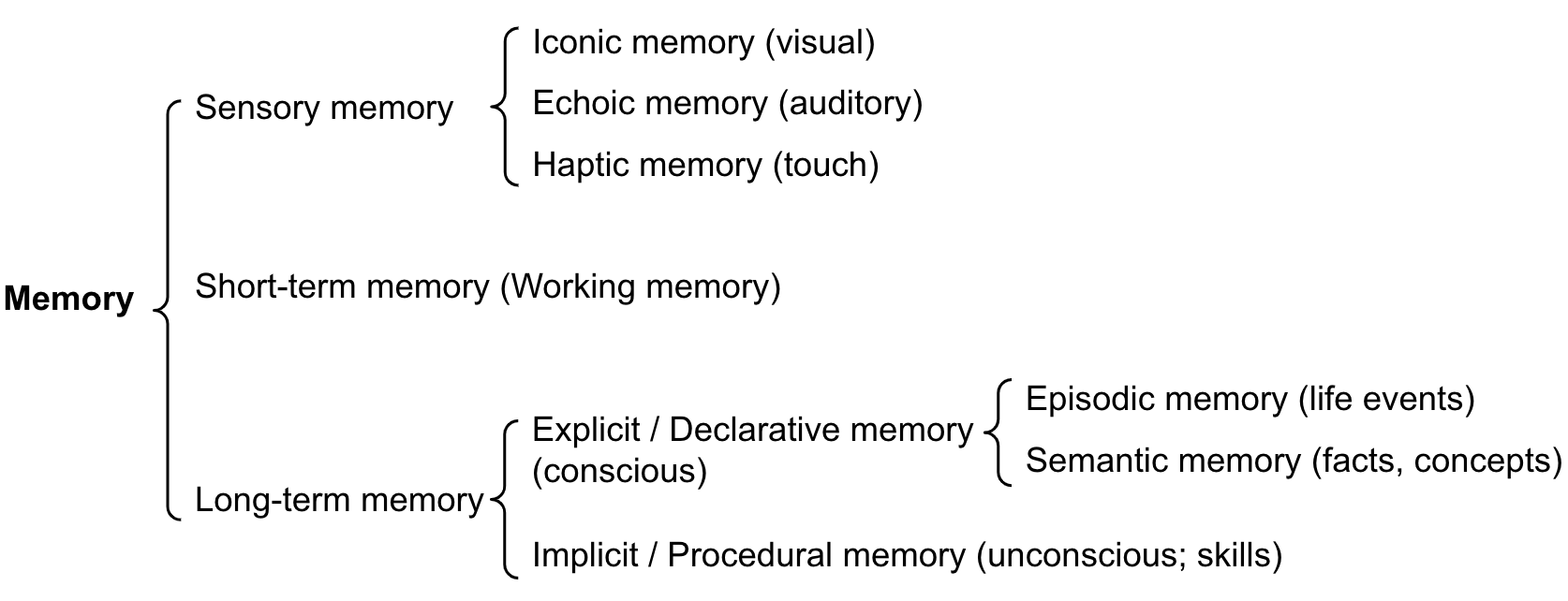

Memory plays a crucial role in an agent’s reasoning process:

Figure from Cognitive Architectures for Language Agents

Human Brain vs. Agent Memory

Human cognitive architecture broadly includes:

Figure from Lil’s log: LLM Powered Autonomous Agents

Some researchers suggest aligning an agent’s cognitive architecture with the human brain’s.

Figure from Cognitive Architectures for Language Agents

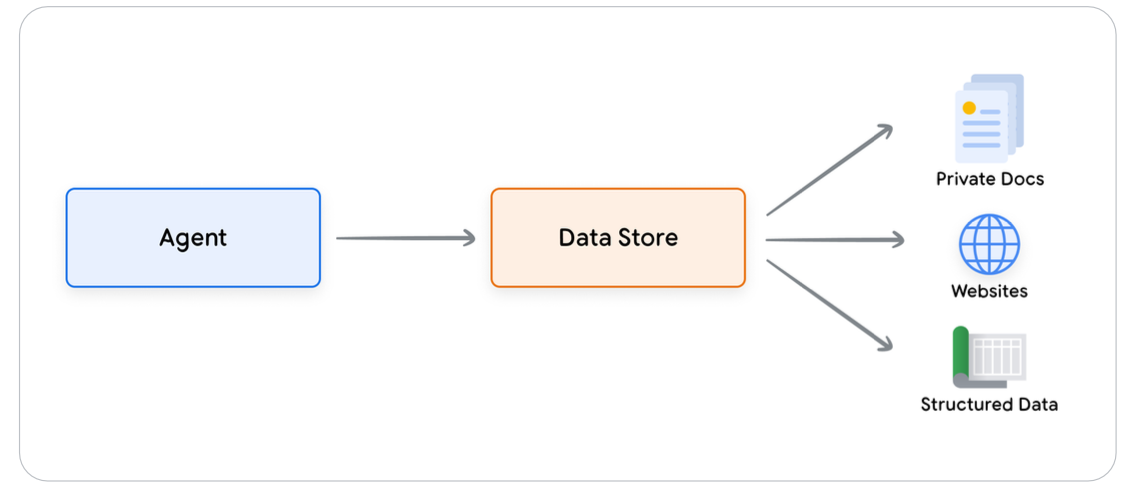

Data stores convert incoming documents into vector embeddings held in a vector database, so that an agent can retrieve the information it needs. For example, Retrieval-Augmented Generation (RAG) uses vector embeddings to retrieve contextually relevant passages and adds them to the prompt.
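The retrieval step can be sketched as follows. This is only an illustration under simplifying assumptions: `embed` is a toy bag-of-words embedding rather than a learned model, and `VectorStore` is a hypothetical in-memory class, not the API of any real vector database.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Dict[str, float]:
    """Toy embedding (word counts); a real system would use a learned model."""
    return dict(Counter(text.lower().split()))


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(v * b.get(k, 0.0) for k, v in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class VectorStore:
    """Hypothetical in-memory store of (document, embedding) pairs."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Dict[str, float]]] = []

    def add(self, doc: str) -> None:
        self.items.append((doc, embed(doc)))

    def search(self, query: str, k: int = 1) -> List[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda it: cosine(q, it[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]


store = VectorStore()
store.add("Agents call tools to act on the world")
store.add("Vector databases store document embeddings")
store.add("Skiing is popular in Colorado")

question = "where are document embeddings stored"
context = store.search(question, k=1)
# The retrieved passage is prepended to the question before calling the model.
prompt = f"Context: {context[0]}\n\nQuestion: {question}"
print(prompt)
```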

Figure from Google whitepaper: Agents

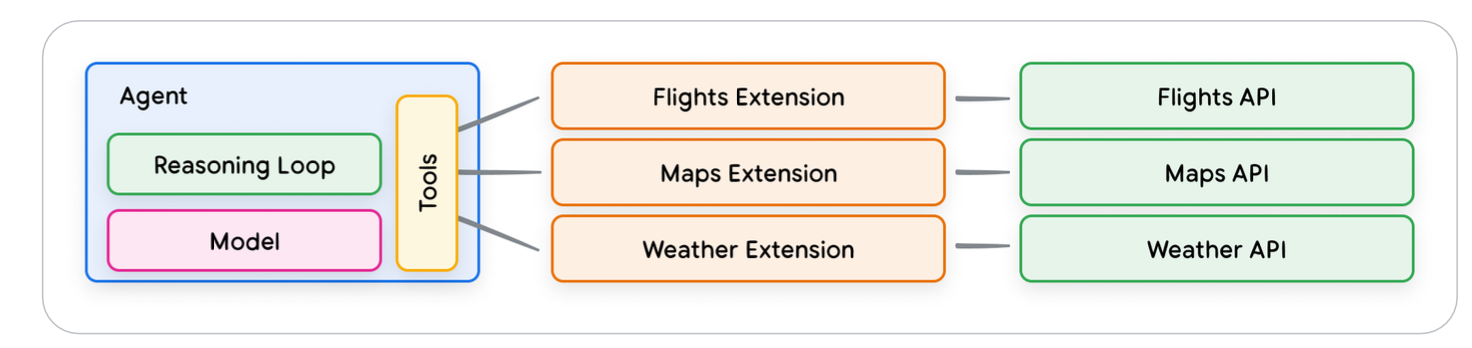

Extensions bridge the gap between agents and APIs by teaching the agent:

Figure from Google whitepaper: Agents

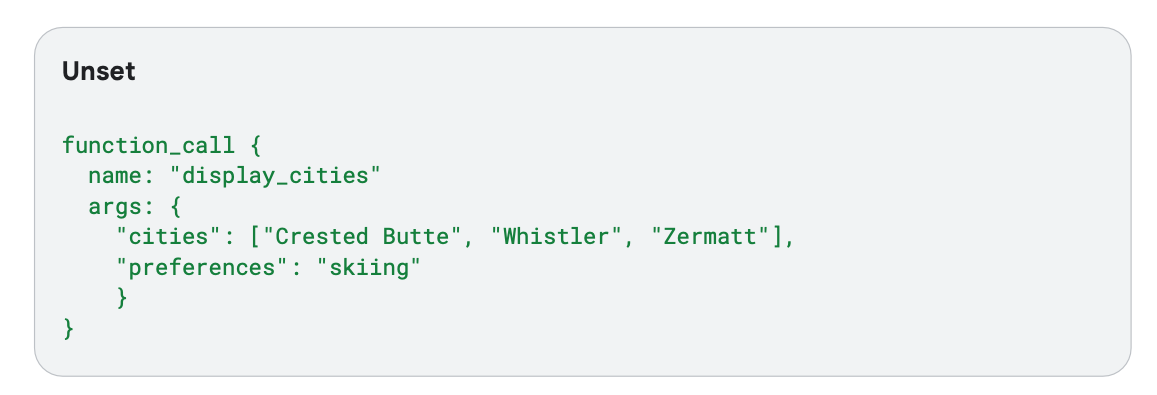

Functions provide developers more control over API execution and data flow. For instance, a user requesting a ski trip suggestion might involve:

Initial output from Ski Model

The model’s output can be structured in JSON for easier parsing:

Ski Model Output in JSON Format

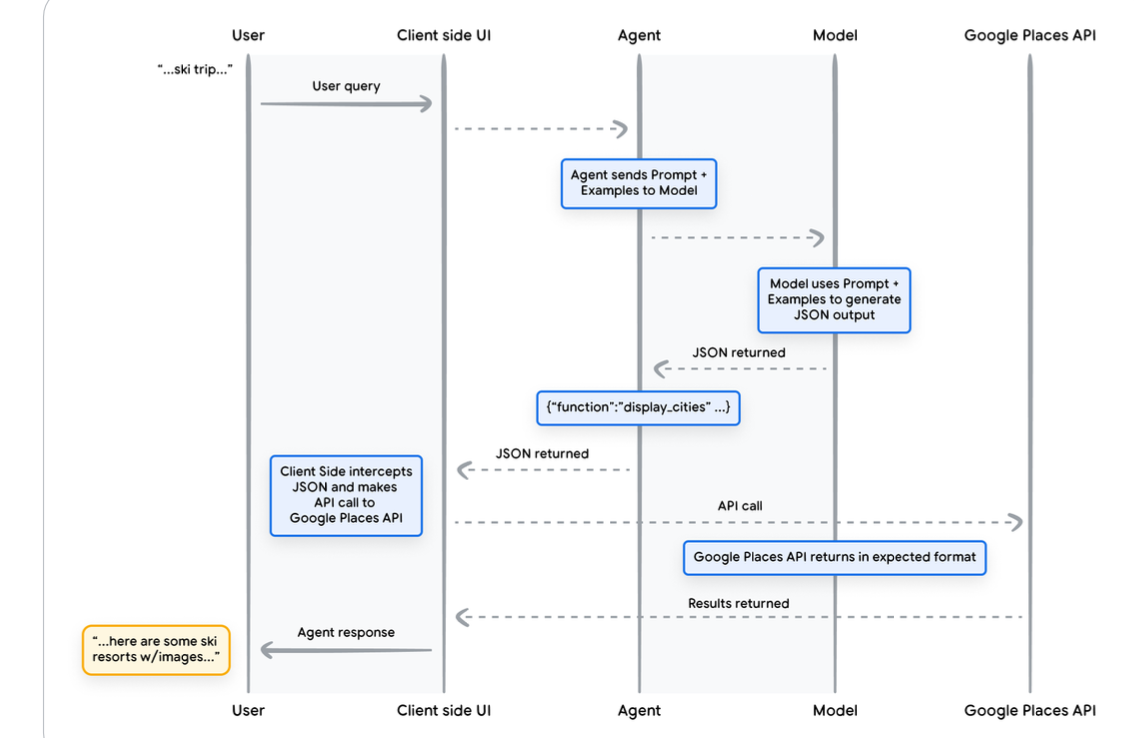

This allows for better API usage:

Ski Agent - External API Integration

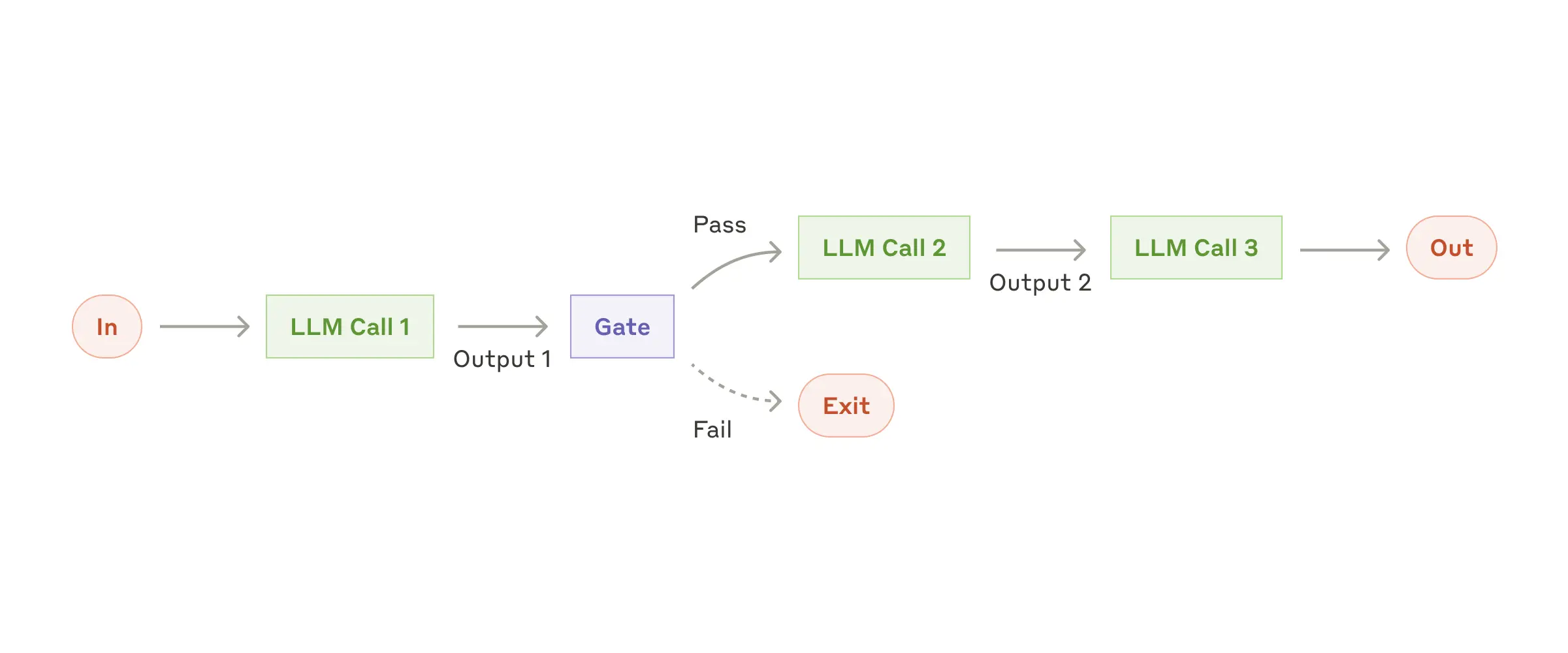
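The flow shown in these figures, where the model emits a structured function call and the client side executes it, might look roughly like this. The JSON shape, the `display_cities` function, and the registry are assumptions made for illustration; a real client would call an external API where the stub returns a formatted string.

```python
import json
from typing import Any, Callable, Dict, List


def display_cities(cities: List[str], preferences: str) -> List[str]:
    """Hypothetical client-side function; a real one might query a places API."""
    return [f"{city} ({preferences})" for city in cities]


# The developer decides which functions the model is allowed to trigger.
REGISTRY: Dict[str, Callable[..., Any]] = {"display_cities": display_cities}

# Assumed model output: a function name plus arguments, serialized as JSON.
model_output = json.dumps(
    {
        "name": "display_cities",
        "args": {
            "cities": ["Crested Butte", "Whistler", "Zermatt"],
            "preferences": "skiing",
        },
    }
)


def execute_function_call(raw: str) -> Any:
    """Parse the model's JSON and dispatch to the matching local function."""
    call = json.loads(raw)
    func = REGISTRY.get(call["name"])
    if func is None:
        raise ValueError(f"model requested unknown function: {call['name']}")
    return func(**call["args"])


result = execute_function_call(model_output)
print(result)
```

Because execution happens in developer code rather than inside the agent, the developer can validate arguments, add authentication, or reshape the data before anything reaches the API.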

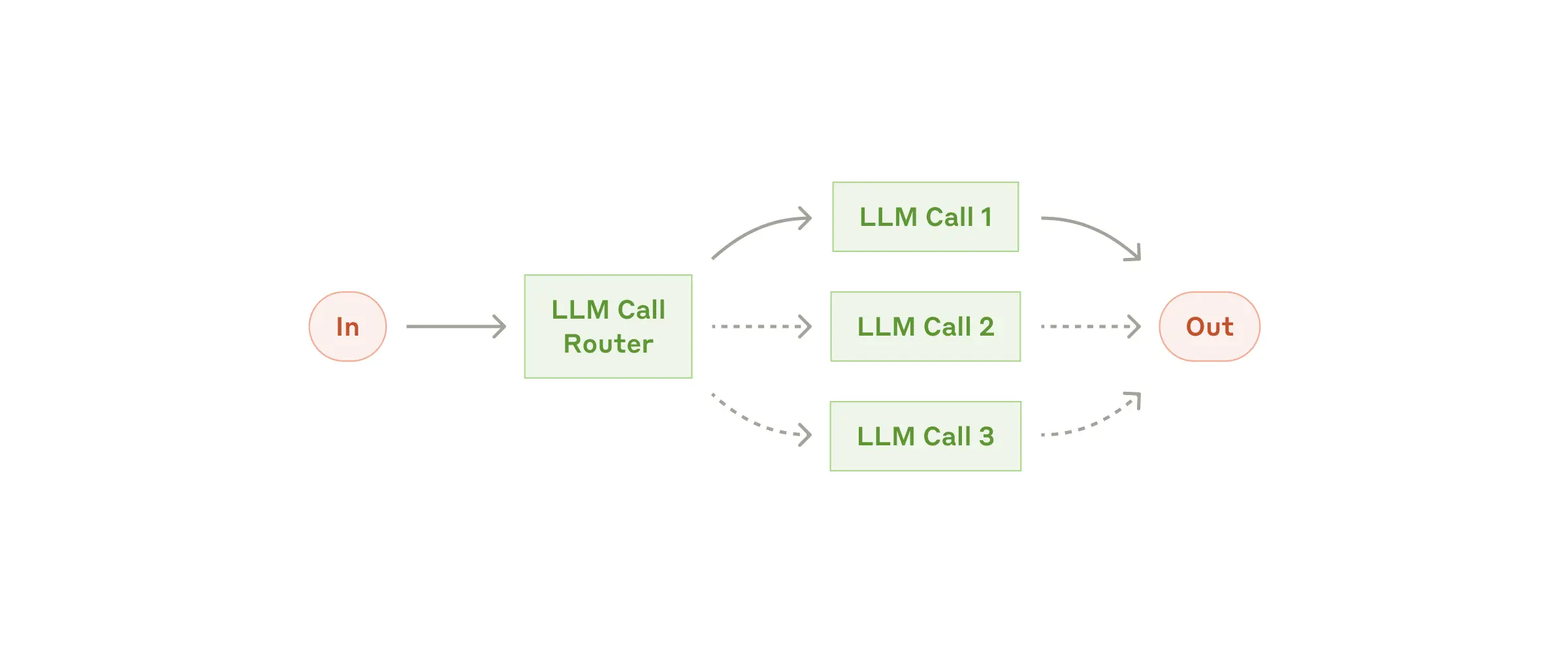

To integrate data stores and APIs in an agent system, developers build workflows using LLMs and tools. Examples include:

Prompt chaining with programmatic checks

Routing

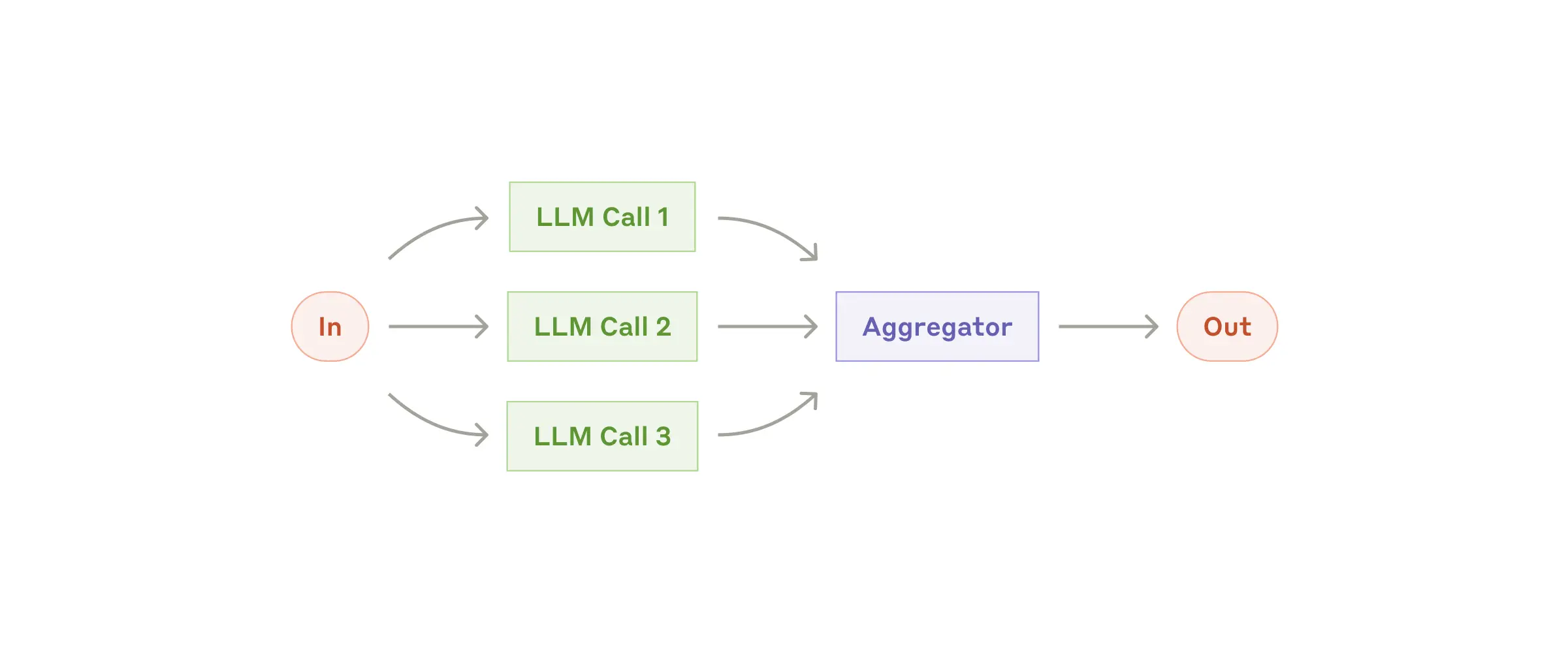

Parallelization

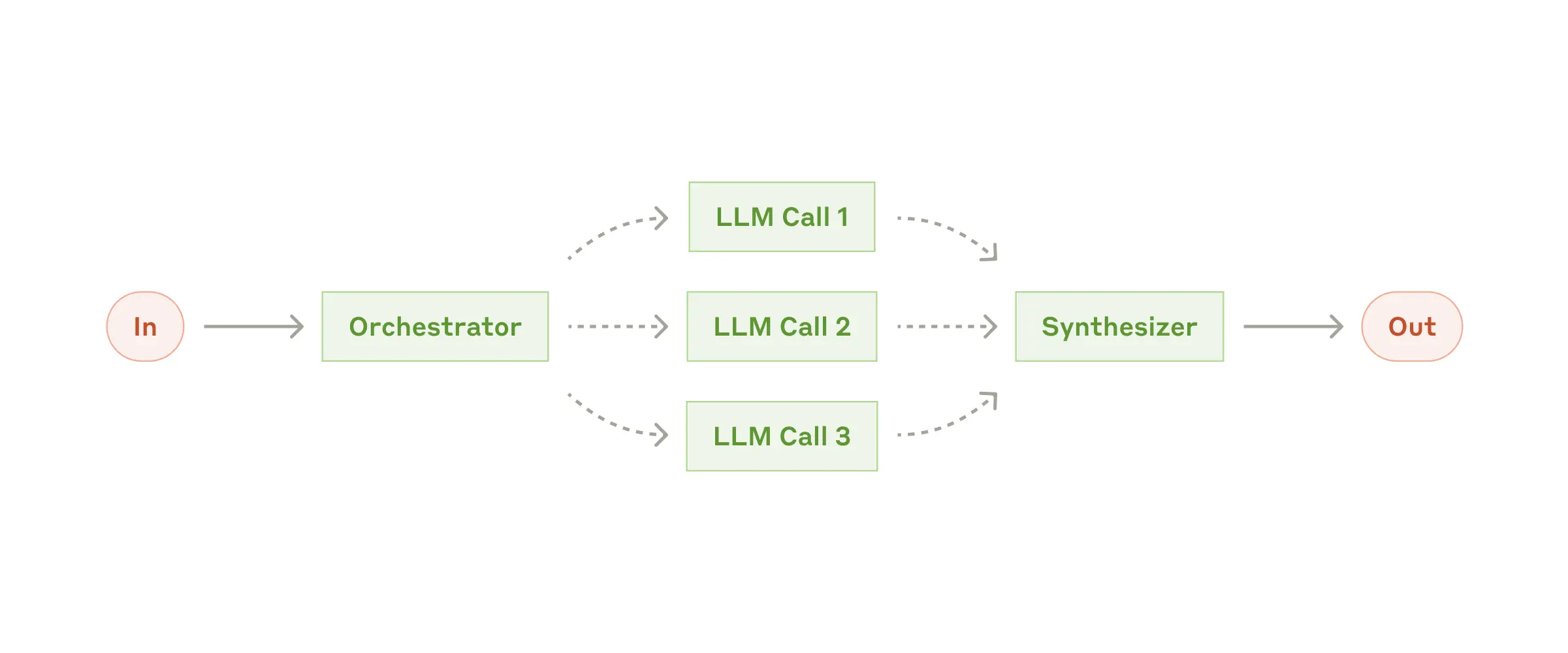

Orchestrator-workers

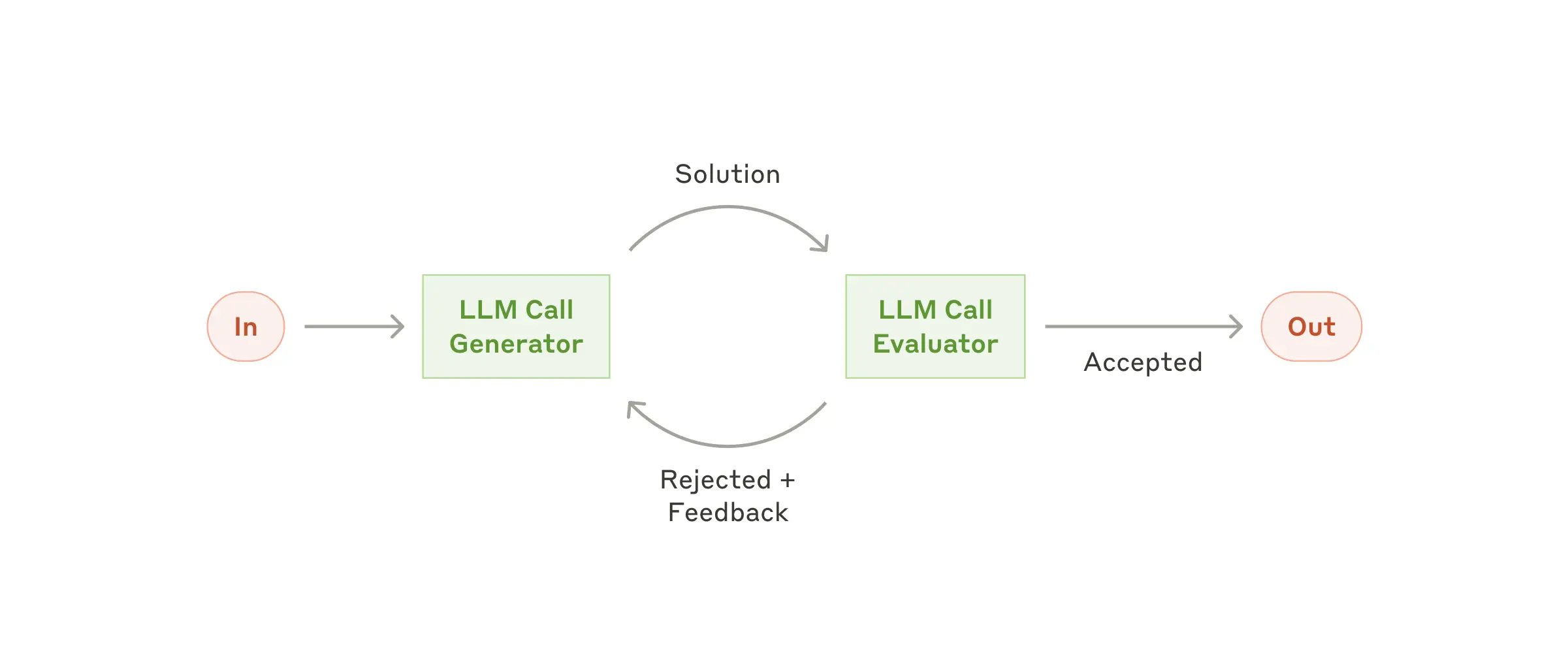

Evaluator-optimizer
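Two of the workflows listed above, prompt chaining with a programmatic check and the evaluator-optimizer loop, can be sketched with stub functions in place of model calls. Every function name here is hypothetical, and the stubs return canned text so the example runs offline; in practice each would prompt an LLM.

```python
def write_outline(topic: str) -> str:
    """Stub for the first LLM call in the chain."""
    return f"1. What is {topic}\n2. How it works\n3. Open problems"


def expand_outline(outline: str) -> str:
    """Stub for the second LLM call, which consumes the first call's output."""
    return "Draft covering: " + " | ".join(outline.splitlines())


def outline_is_valid(outline: str) -> bool:
    """Programmatic gate: require at least three numbered items."""
    numbered = [ln for ln in outline.splitlines() if ln[:1].isdigit()]
    return len(numbered) >= 3


def prompt_chain(topic: str) -> str:
    """Prompt chaining: each step feeds the next, with a check in between."""
    outline = write_outline(topic)
    if not outline_is_valid(outline):
        raise ValueError("outline failed the check; stopping the chain")
    return expand_outline(outline)


def generate(task: str, feedback: str) -> str:
    """Stub generator that revises its draft when given feedback."""
    draft = f"Summary of {task}"
    if "add sources" in feedback:
        draft += " [sources: A, B]"
    return draft


def evaluate(draft: str) -> str:
    """Stub evaluator: returns 'PASS' or a piece of feedback."""
    return "PASS" if "sources" in draft else "add sources"


def evaluator_optimizer(task: str, max_rounds: int = 3) -> str:
    """Loop generation and evaluation until the draft passes or rounds run out."""
    feedback = ""
    draft = ""
    for _ in range(max_rounds):
        draft = generate(task, feedback)
        feedback = evaluate(draft)
        if feedback == "PASS":
            break
    return draft


print(prompt_chain("agents"))
print(evaluator_optimizer("agents"))
```

The `max_rounds` cap is a design choice worth keeping in a real system, since an evaluator that never passes would otherwise loop indefinitely.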

The Model Context Protocol (MCP) is an open standard for connecting models to external systems such as content repositories and business tools, with the aim of helping them produce more relevant responses.

Agents interact with their environments as “text games,” receiving textual observations and producing textual actions.
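The "text game" framing amounts to a loop in which the agent reads a textual observation and emits a textual action. The sketch below is a toy illustration: `TextEnv` is a hypothetical environment invented for this example, and `policy` is a hard-coded stand-in for the LLM that would choose actions.

```python
from typing import List, Tuple


class TextEnv:
    """Hypothetical toy environment: take the key, then open the door."""

    def __init__(self) -> None:
        self.has_key = False
        self.done = False

    def reset(self) -> str:
        self.has_key = False
        self.done = False
        return "You are in a room with a locked door and a key on the table."

    def step(self, action: str) -> str:
        """Apply a textual action and return the next textual observation."""
        if action == "take key":
            self.has_key = True
            return "You pick up the key."
        if action == "open door":
            if self.has_key:
                self.done = True
                return "The door opens. You win."
            return "The door is locked."
        return "Nothing happens."


def policy(observation: str) -> str:
    """Stand-in for an LLM: maps the latest observation to an action string."""
    if "key on the table" in observation or "is locked" in observation:
        return "take key"
    return "open door"


def run_episode(env: TextEnv, max_steps: int = 5) -> List[Tuple[str, str]]:
    """Run the observe-act loop and record (action, observation) pairs."""
    observation = env.reset()
    transcript: List[Tuple[str, str]] = []
    for _ in range(max_steps):
        action = policy(observation)
        observation = env.step(action)
        transcript.append((action, observation))
        if env.done:
            break
    return transcript


transcript = run_episode(TextEnv())
for action, observation in transcript:
    print(f"> {action}\n{observation}")
```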

Strategies to improve model tool selection: